| CV |

Email |

Google Scholar |

|

I am a Research Engineer at General Robotics, where I am fortunate to work with Dr. Jonathan Huang and other talented people. I am currently working on robot manipulation, especially Vision-Language-Action models and their application to industrial use cases. Previously, I completed my Master's in Robotics at Carnegie Mellon University, advised by Prof. Katia Sycara. During my Master's studies, I worked on multi-modal perception and Large Vision-Language Models. My research interests lie at the intersection of robotics and computer vision, especially multi-modal learning, Vision-Language Models, robot manipulation, and efficient AI. Email: zifuw [AT] andrew.cmu.edu |

|

website |

abstract |

bibtex |

arXiv |

code

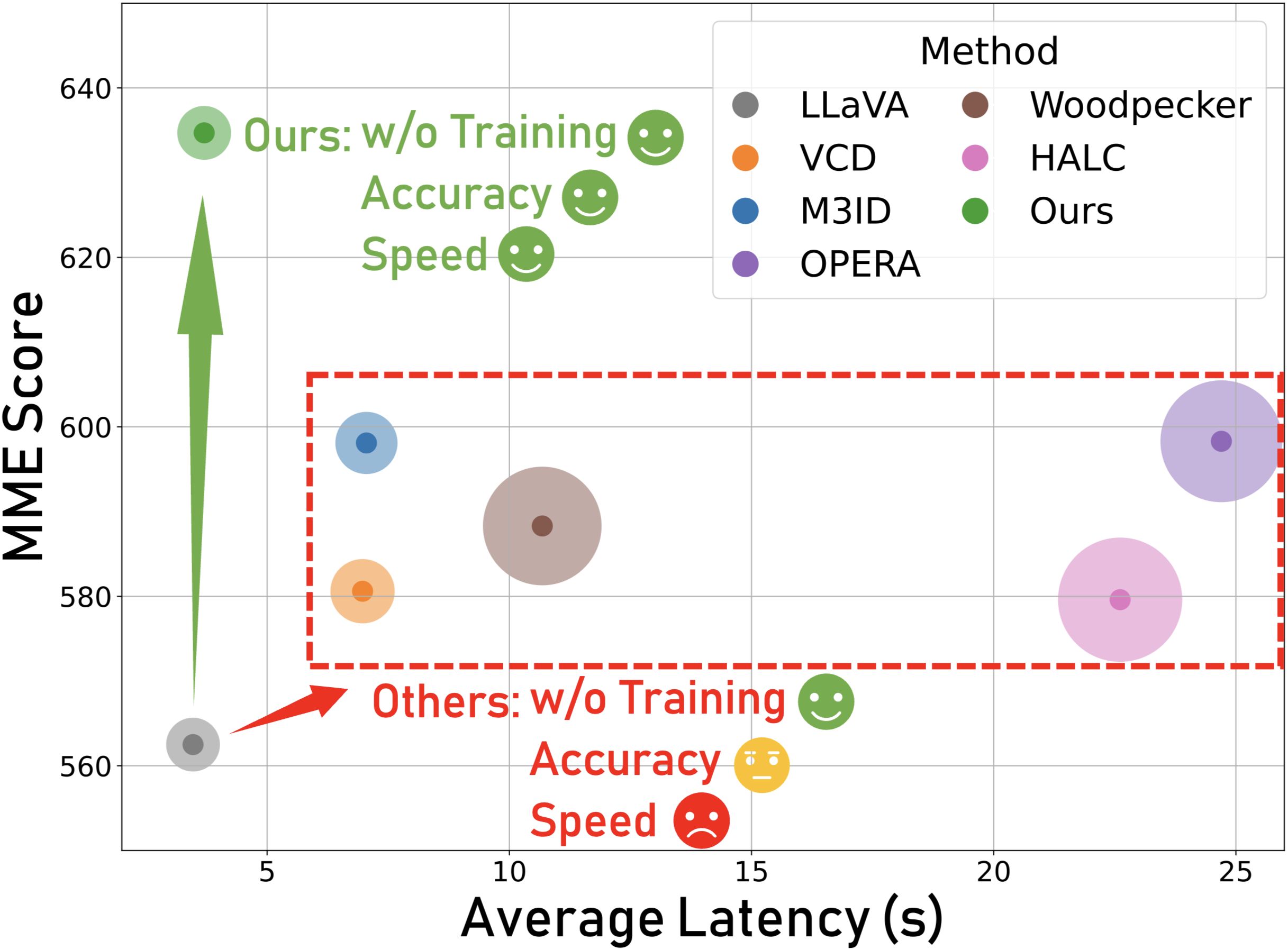

Recent Large Vision-Language Models (LVLMs) have introduced a new paradigm for understanding and reasoning about image input through textual responses. Although they have achieved remarkable performance across a range of multi-modal tasks, they face the persistent challenge of hallucination, which introduces practical weaknesses and raises concerns about their reliable deployment in real-world applications. Existing work has explored contrastive decoding approaches to mitigate this issue, where the output of the original LVLM is compared and contrasted with that of a perturbed version. However, these methods require two or more queries that slow down LVLM response generation, making them less suitable for real-time applications. To overcome this limitation, we propose ONLY, a training-free decoding approach that requires only a single query and a one-layer intervention during decoding, enabling efficient real-time deployment. Specifically, we enhance textual outputs by selectively amplifying crucial textual information using a text-to-visual entropy ratio for each token. Extensive experimental results demonstrate that our ONLY approach consistently outperforms state-of-the-art methods across various benchmarks while requiring minimal implementation effort and computational cost.

@InProceedings{Wan_2025_ICCV,

author = {Wan, Zifu and Zhang, Ce and Yong, Silong and Ma, Martin Q. and Stepputtis, Simon and Morency, Louis-Philippe and Ramanan, Deva and Sycara, Katia and Xie, Yaqi},

title = {ONLY: One-Layer Intervention Sufficiently Mitigates Hallucinations in Large Vision-Language Models},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2025},

pages = {3225--3234}

}

|

|

website |

abstract |

bibtex |

arXiv |

code

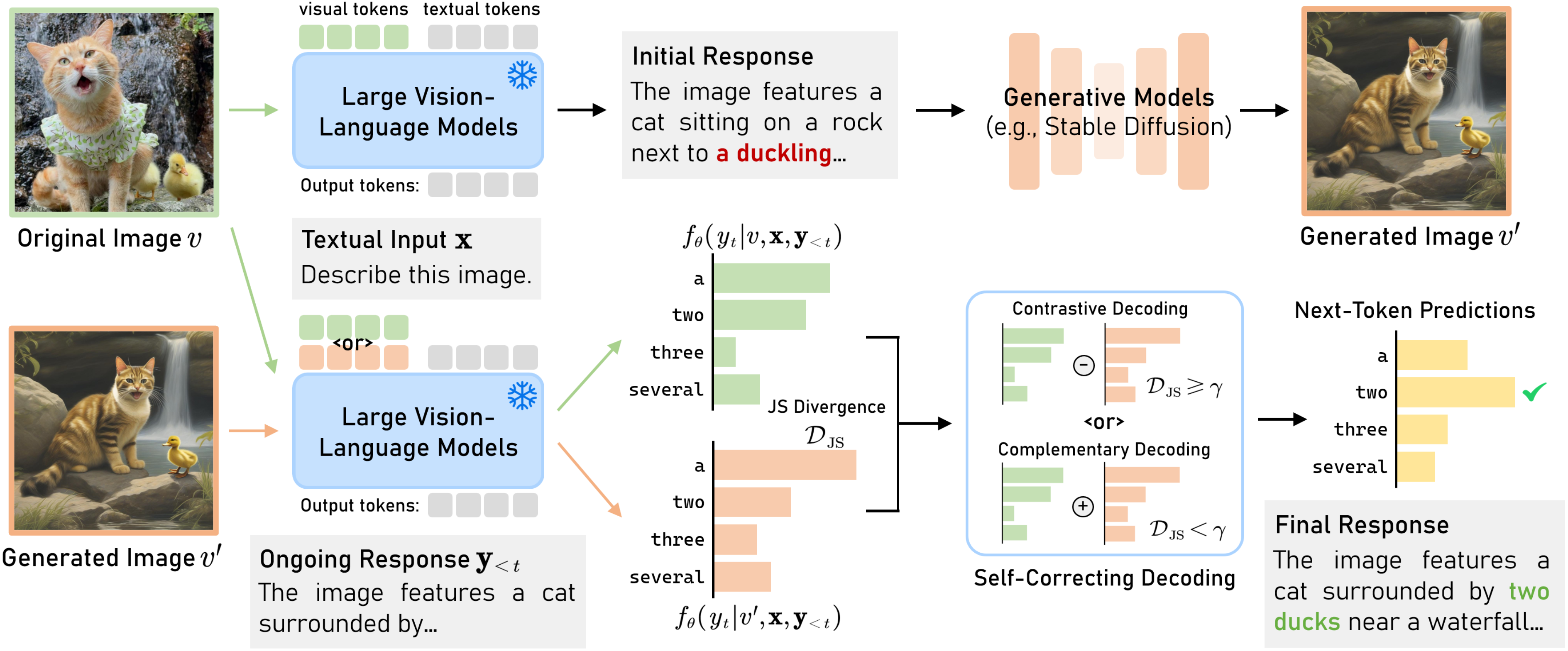

While recent Large Vision-Language Models (LVLMs) have shown remarkable performance in multi-modal tasks, they are prone to generating hallucinatory text responses that do not align with the given visual input, which restricts their practical applicability in real-world scenarios. In this work, inspired by the observation that the text-to-image generation process is the inverse of image-conditioned response generation in LVLMs, we explore the potential of leveraging text-to-image generative models to assist in mitigating hallucinations in LVLMs. We discover that generative models can offer valuable self-feedback for mitigating hallucinations at both the response and token levels. Building on this insight, we introduce self-correcting Decoding with Generative Feedback (DeGF), a novel training-free algorithm that incorporates generative feedback into the decoding process to effectively mitigate hallucinations. Specifically, DeGF generates an image from the initial response produced by LVLMs, which acts as an auxiliary visual reference and provides self-feedback to verify and, if necessary, correct the initial response. Extensive experimental results validate the effectiveness of our approach in mitigating diverse types of hallucinations, consistently surpassing state-of-the-art methods across two evaluated LVLMs and five benchmarks.

@inproceedings{zhang2025selfcorrecting,

title={Self-Correcting Decoding with Generative Feedback for Mitigating Hallucinations in Large Vision-Language Models},

author={Ce Zhang and Zifu Wan and Zhehan Kan and Martin Q. Ma and Simon Stepputtis and Deva Ramanan and Russ Salakhutdinov and Louis-Philippe Morency and Katia P. Sycara and Yaqi Xie},

booktitle={The Thirteenth International Conference on Learning Representations},

year={2025},

url={https://openreview.net/forum?id=tTBXePRKSx}

}

|

|

pdf |

abstract |

bibtex |

OpenReview |

code

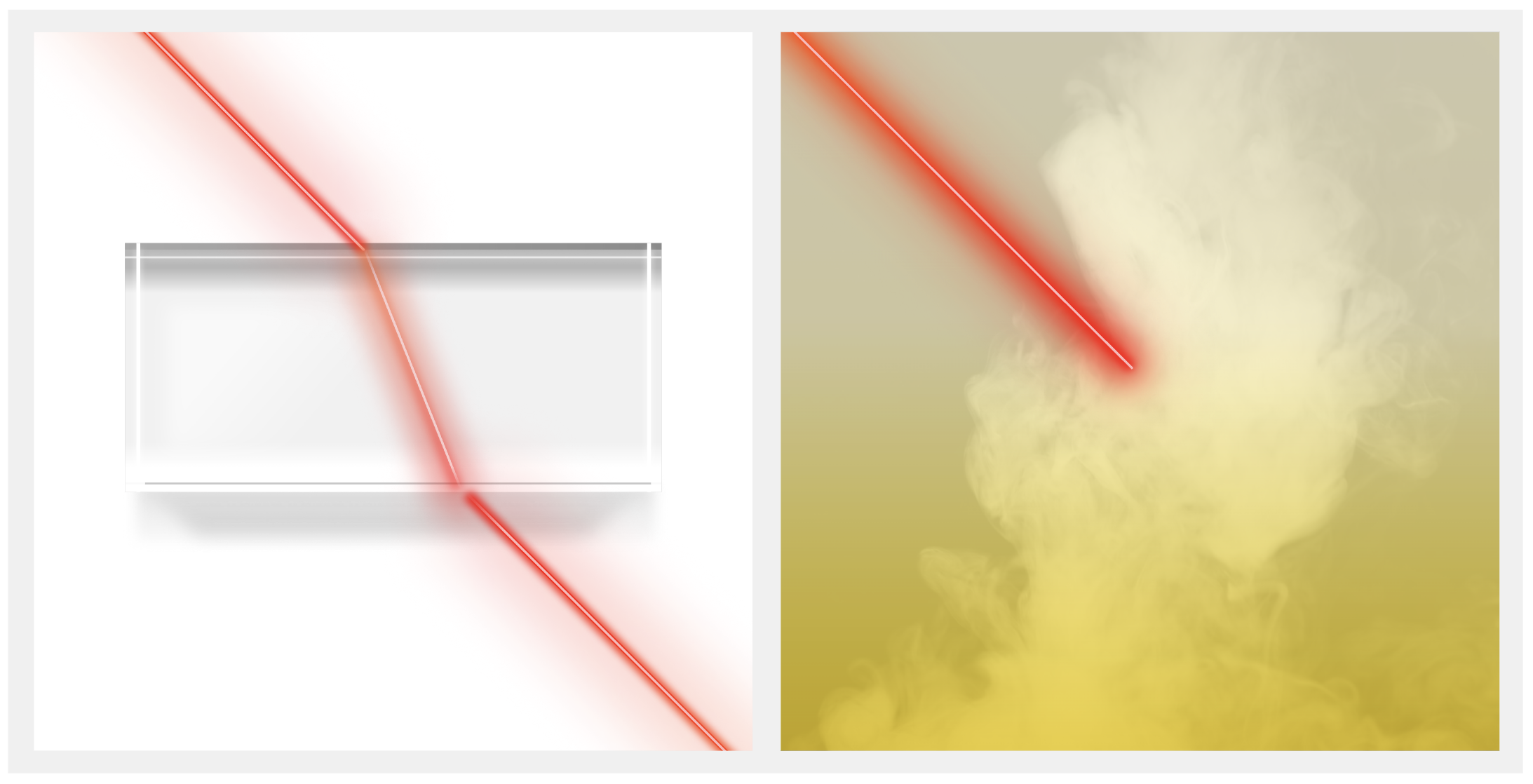

Decomposing geometry, materials and lighting from a set of images, namely inverse rendering, has been a long-standing problem in computer vision and graphics. Recent advances in neural rendering enable photo-realistic and plausible inverse rendering results. The emergence of 3D Gaussian Splatting has boosted it to the next level by showing real-time rendering potentials. An intuitive finding is that the models used for inverse rendering do not take into account the dependency of opacity w.r.t. material properties, namely cross section, as suggested by optics. Therefore, we develop a novel approach that adds this dependency to the modeling itself. Inspired by radiative transfer, we augment the opacity term by introducing a neural network that takes as input material properties to provide modeling of cross section and a physically correct activation function. The gradients for material properties are therefore not only from color but also from opacity, facilitating a constraint for their optimization. Therefore, the proposed method incorporates more accurate physical properties compared to previous works. We implement our method into 3 different baselines that use Gaussian Splatting for inverse rendering and achieve significant improvements universally in terms of novel view synthesis and material modeling.

@article{yong2025omg,

title={OMG: Opacity Matters in Material Modeling with Gaussian Splatting},

author={Yong, Silong and Manivannan, Venkata Nagarjun Pudureddiyur and Kerbl, Bernhard and Wan, Zifu and Stepputtis, Simon and Sycara, Katia and Xie, Yaqi},

journal={arXiv preprint arXiv:2502.10988},

year={2025}

}

|

|

pdf |

abstract |

bibtex |

arXiv |

code |

X

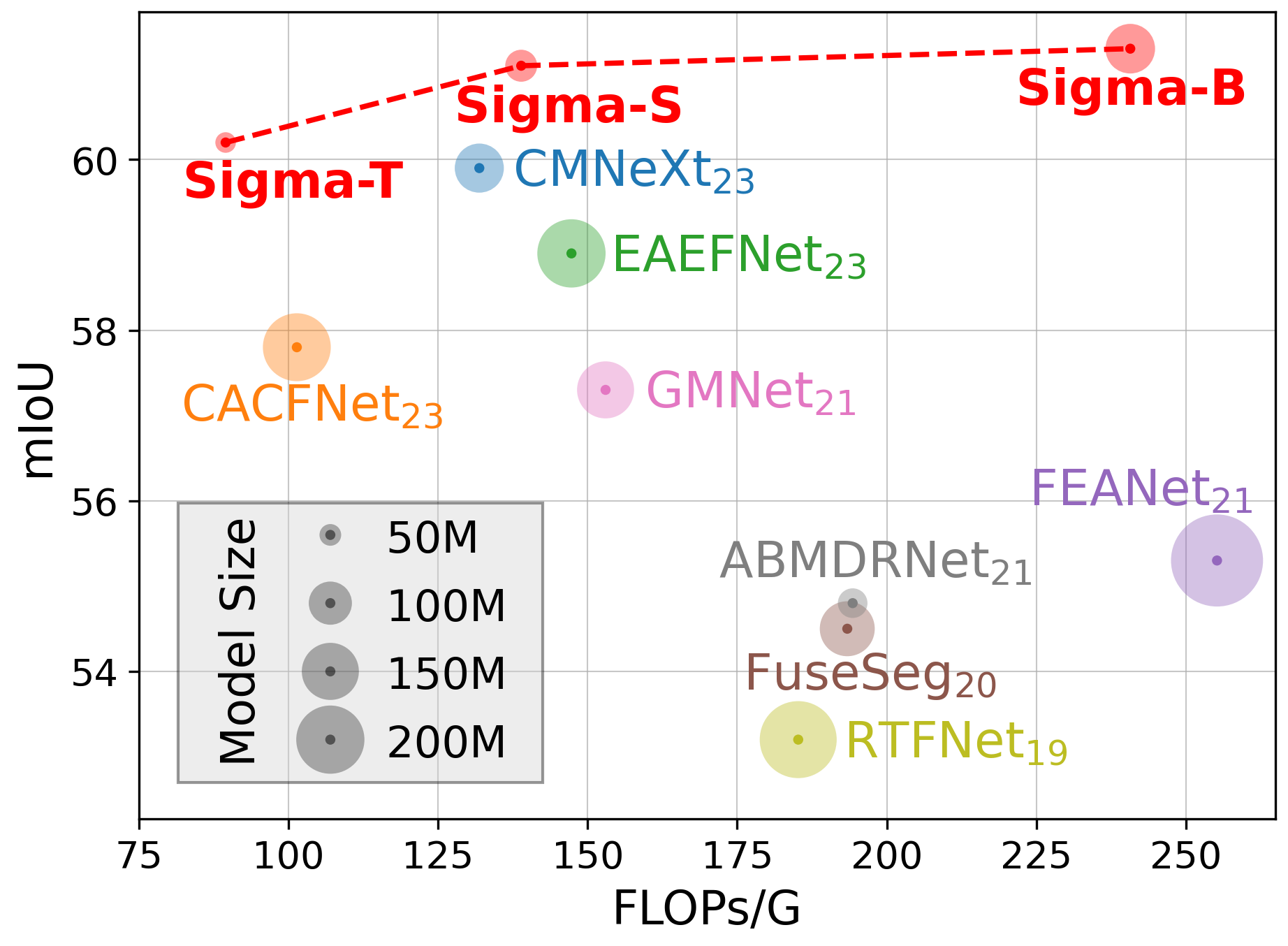

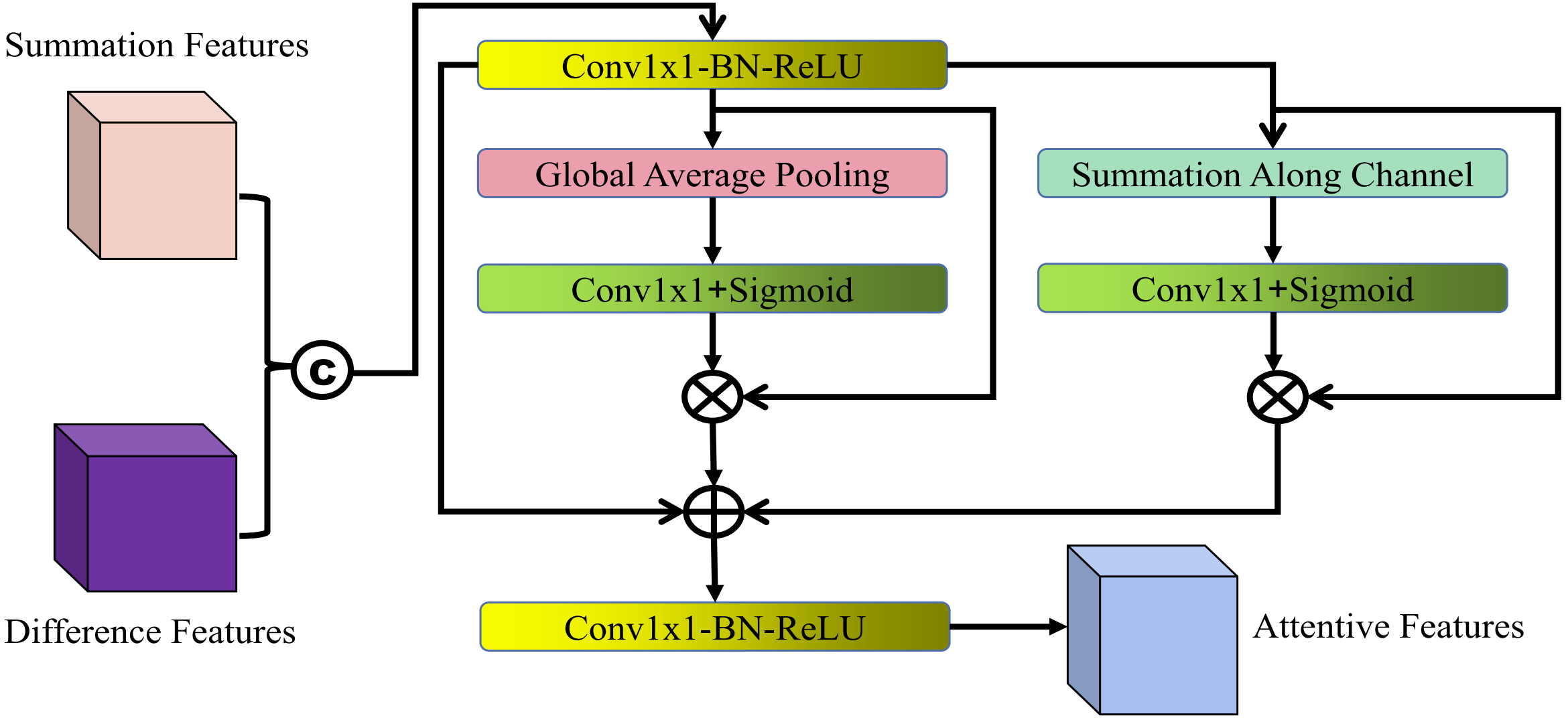

Multi-modal semantic segmentation significantly enhances AI agents' perception and scene understanding, especially under adverse conditions like low-light or overexposed environments. Leveraging additional modalities (X-modality) like thermal and depth alongside traditional RGB provides complementary information, enabling more robust and reliable segmentation. In this work, we introduce Sigma, a Siamese Mamba network for multi-modal semantic segmentation, utilizing the Selective Structured State Space Model, Mamba. Unlike conventional methods that rely on CNNs, with their limited local receptive fields, or Vision Transformers (ViTs), which offer global receptive fields at the cost of quadratic complexity, our model achieves global receptive fields coverage with linear complexity. By employing a Siamese encoder and innovating a Mamba fusion mechanism, we effectively select essential information from different modalities. A decoder is then developed to enhance the channel-wise modeling ability of the model. Our method, Sigma, is rigorously evaluated on both RGB-Thermal and RGB-Depth segmentation tasks, demonstrating its superiority and marking the first successful application of State Space Models (SSMs) in multi-modal perception tasks. Code is available at this URL.

@article{wan2024sigma,

title={Sigma: Siamese Mamba Network for Multi-Modal Semantic Segmentation},

author={Wan, Zifu and Wang, Yuhao and Yong, Silong and Zhang, Pingping and Stepputtis, Simon and Sycara, Katia and Xie, Yaqi},

journal={arXiv preprint arXiv:2404.04256},

year={2024}

}

|

|

pdf |

abstract |

bibtex |

arXiv |

website

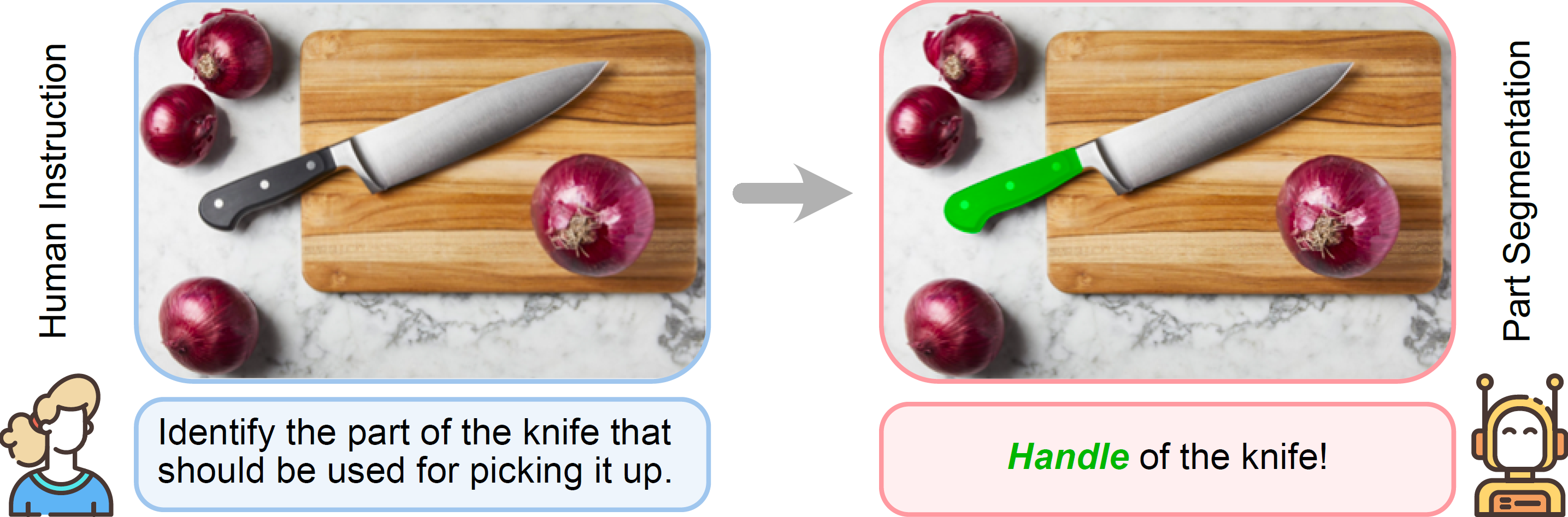

Large multimodal foundation models, particularly in the domains of language and vision, have significantly advanced various tasks, including robotics, autonomous driving, information retrieval, and grounding. However, many of these models perceive objects as indivisible, overlooking the components that constitute them. Understanding these components and their associated affordances provides valuable insights into an object's functionality, which is fundamental for performing a wide range of tasks. In this work, we introduce a novel real-world benchmark, InstructPart, comprising hand-labeled part segmentation annotations and task-oriented instructions to evaluate the performance of current models in understanding and executing part-level tasks within everyday contexts. Through our experiments, we demonstrate that task-oriented part segmentation remains a challenging problem, even for state-of-the-art Vision-Language Models (VLMs). In addition to our benchmark, we introduce a simple baseline that achieves a twofold performance improvement through fine-tuning with our dataset. With our dataset and benchmark, we aim to facilitate research on task-oriented part segmentation and enhance the applicability of VLMs across various domains, including robotics, virtual reality, information retrieval, and other related fields.

@article{wan2025instructpart,

title={InstructPart: Task-Oriented Part Segmentation with Instruction Reasoning},

author={Wan, Zifu and Xie, Yaqi and Zhang, Ce and Lin, Zhiqiu and Wang, Zihan and Stepputtis, Simon and Ramanan, Deva and Sycara, Katia},

journal={arXiv preprint arXiv:2505.18291},

year={2025}

}

|

|

pdf |

abstract |

bibtex |

arXiv

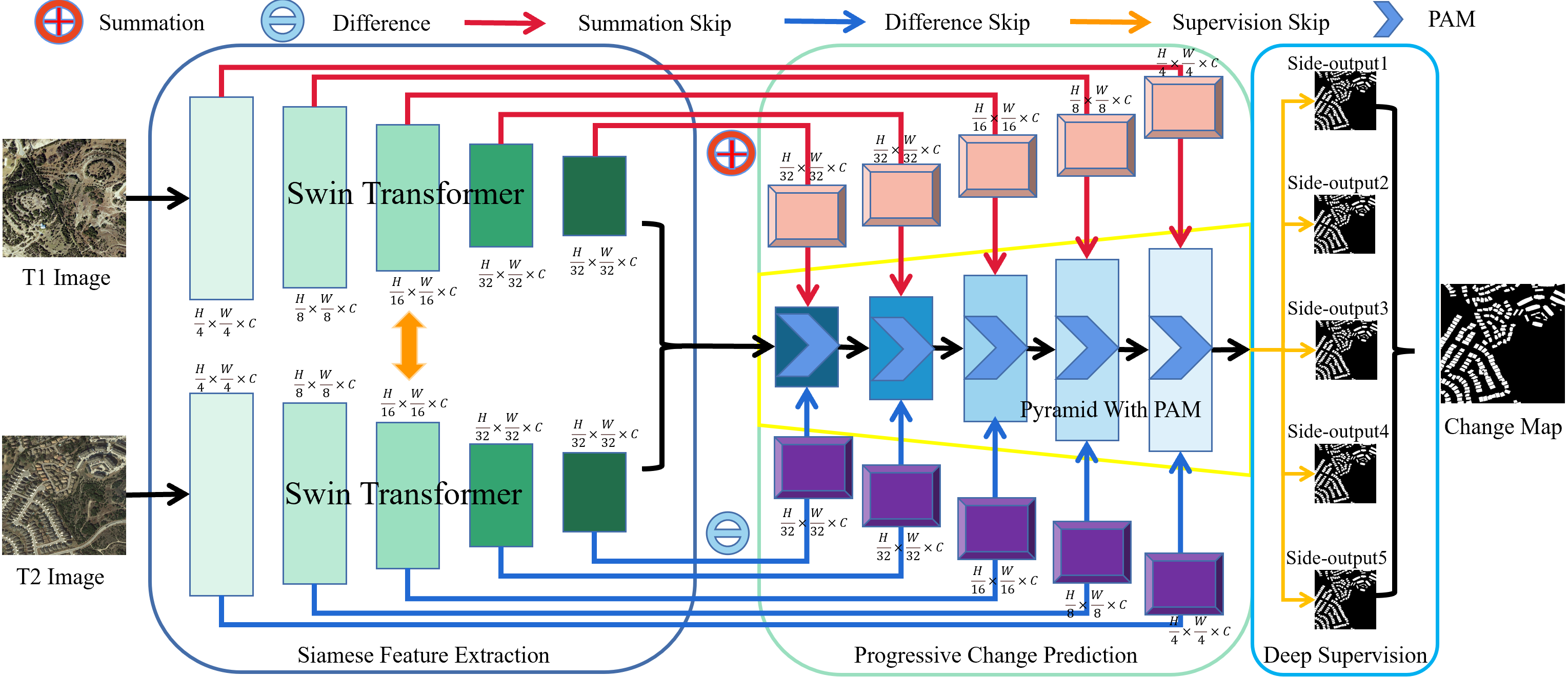

In the remote sensing field, Change Detection (CD) aims to identify and localize the changed regions from dual-phase images over the same places. Recently, it has achieved great progress with the advances of deep learning. However, current methods generally deliver incomplete CD regions and irregular CD boundaries due to the limited representation ability of the extracted visual features. To relieve these issues, in this work we propose a novel Transformer-based learning framework named TransY-Net for remote sensing image CD, which improves the feature extraction from a global view and combines multi-level visual features in a pyramid manner. More specifically, the proposed framework first utilizes the advantages of Transformers in long-range dependency modeling. It can help to learn more discriminative global-level features and obtain complete CD regions. Then, we introduce a novel pyramid structure to aggregate multi-level visual features from Transformers for feature enhancement. The pyramid structure grafted with a Progressive Attention Module (PAM) can improve the feature representation ability with additional inter-dependencies through spatial and channel attentions. Finally, to better train the whole framework, we utilize the deeply-supervised learning with multiple boundary-aware loss functions. Extensive experiments demonstrate that our proposed method achieves a new state-of-the-art performance on four optical and two SAR image CD benchmarks. The source code is released at this URL.

@article{yan2023transy,

title={TransY-Net: Learning Fully Transformer Networks for Change Detection of Remote Sensing Images},

author={Yan, Tianyu and Wan, Zifu and Zhang, Pingping and Cheng, Gong and Lu, Huchuan},

journal={IEEE Transactions on Geoscience and Remote Sensing},

year={2023},

publisher={IEEE}

}

|

|

pdf |

abstract |

bibtex |

arXiv |

code

Recently, change detection (CD) of remote sensing images has achieved great progress with the advances of deep learning. However, current methods generally deliver incomplete CD regions and irregular CD boundaries due to the limited representation ability of the extracted visual features. To relieve these issues, in this work we propose a novel learning framework named Fully Transformer Network (FTN) for remote sensing image CD, which improves the feature extraction from a global view and combines multi-level visual features in a pyramid manner. More specifically, the proposed framework first utilizes the advantages of Transformers in long-range dependency modeling. It can help to learn more discriminative global-level features and obtain complete CD regions. Then, we introduce a pyramid structure to aggregate multi-level visual features from Transformers for feature enhancement. The pyramid structure grafted with a Progressive Attention Module (PAM) can improve the feature representation ability with additional interdependencies through channel attentions. Finally, to better train the framework, we utilize deeply-supervised learning with multiple boundary-aware loss functions. Extensive experiments demonstrate that our proposed method achieves a new state-of-the-art performance on four public CD benchmarks. For model reproduction, the source code is released at this URL.

@inproceedings{yan2022fully,

title={Fully transformer network for change detection of remote sensing images},

author={Yan, Tianyu and Wan, Zifu and Zhang, Pingping},

booktitle={Proceedings of the Asian Conference on Computer Vision},

pages={1691--1708},

year={2022}

}

|

|

competition website |

winner announcement |

IEEE news |

pdf |

abstract |

bibtex |

arXiv |

code

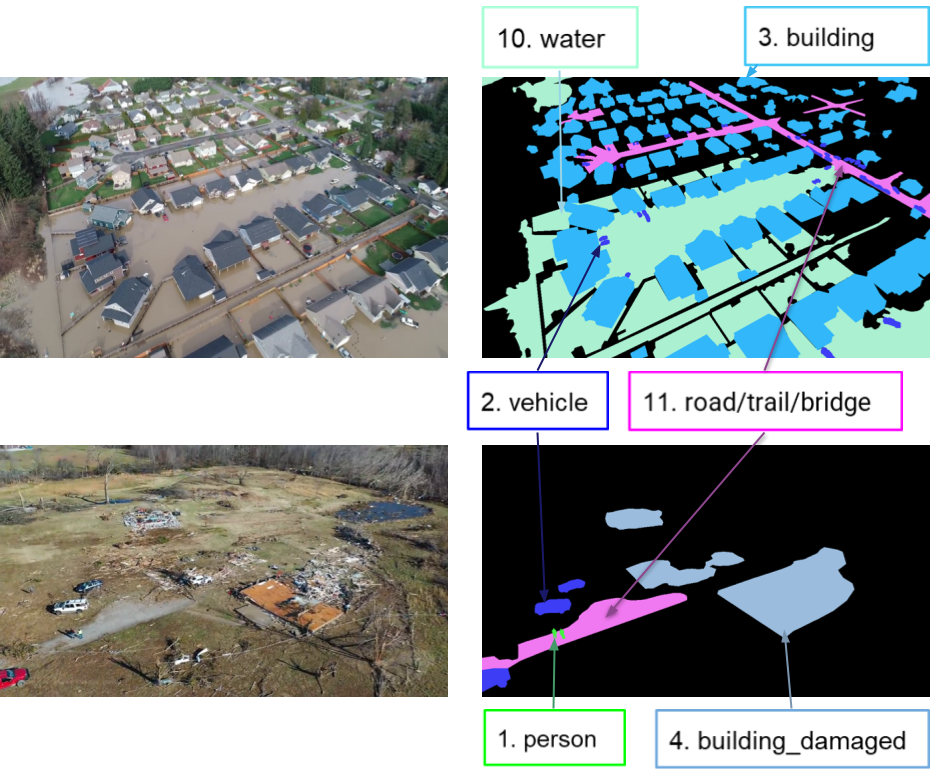

This article describes the 2023 IEEE Low-Power Computer Vision Challenge (LPCVC). Since 2015, LPCVC has been an international competition devoted to tackling the challenge of computer vision (CV) on edge devices. Most CV researchers focus on improving accuracy, at the expense of ever-growing sizes of machine models. LPCVC balances accuracy with resource requirements. Winners must achieve high accuracy with short execution time when their CV solutions run on an embedded device, such as Raspberry PI or Nvidia Jetson Nano. The vision problem for 2023 LPCVC is segmentation of images acquired by Unmanned Aerial Vehicles (UAVs, also called drones) after disasters. The 2023 LPCVC attracted 60 international teams that submitted 676 solutions during the submission window of one month. This article explains the setup of the competition and highlights the winners' methods that improve accuracy and shorten execution time.

@article{chen20242023,

title={2023 Low-Power Computer Vision Challenge (LPCVC) Summary},

author={Chen, Leo and Boardley, Benjamin and Hu, Ping and Wang, Yiru and Pu, Yifan and Jin, Xin and Yao, Yongqiang and Gong, Ruihao and Li, Bo and Huang, Gao and others},

journal={arXiv preprint arXiv:2403.07153},

year={2024}

}

|

|

International Journal of Computer Vision (IJCV)

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025

ACM Multimedia (MM), 2024

IEEE International Conference on Multimedia and Expo (ICME), 2024, 2025

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT) |

|

|